I watch a lot of science fiction, and many of these shows and movies are about AI running amok. From Skynet to the Borg, there are a lot of interesting AI stories. The following is from the website https://interestingengineering.com:

"As society gets closer to human-level AI, scientists debate what it means to exist.

Imagine you undergo a procedure in which every neuron in your brain is gradually replaced by functionally-equivalent electronic components. Let’s say the replacement occurs a single neuron at a time, and that behaviorally, nothing about you changes. From the outside, you are still “you,” even to your closest friends and loved ones.

What would happen to your consciousness? Would it incrementally disappear, one neuron at a time? Would it suddenly blink out of existence after the replacement of some consciousness-critical particle in your posterior cortex? Or would you simply remain you, fully aware of your lived experience and sentience (and either pleased or horrified that your mind could theoretically be preserved forever)?

This famous consciousness thought experiment, proposed by the philosopher David Chalmers in his 1995 paper Absent Qualia, Fading Qualia, Dancing Qualia, raises just about every salient question there is in the debate surrounding the possibility of consciousness in artificial intelligence.

If the prospect of understanding the origins of our own consciousness and that of other species is, as every single person studying it will tell you, daunting, then replicating it in machines is ambitious to an absurd degree.

Will AI ever be conscious? As with all things consciousness-related, the answer is that nobody really knows at this point, and many think that it may be objectively impossible for us to understand if the slippery phenomenon ever does show up in a machine.

Take the thought experiment just described. If consciousness is a unique characteristic of biological systems, then even if your brain’s robotic replacement allowed you to function in exactly the same manner as you had before the procedure, there would be no one at home on the inside, and you’d be a zombie-esque shell of your former self. Those closest to you would have every reason to take your consciousness as a given, but they’d be wrong.

The possibility that we might mistakenly infer consciousness on the basis of outward behavior is not an absurd proposition. It’s conceivable that, once we succeed in building artificial general intelligence—the kind that isn’t narrow like everything out there right now—that can adapt and learn and apply itself in a wide range of contexts, the technology will feel conscious to us, regardless of whether it actually is or not.

Imagine a sort of Alexa or Siri on steroids, a program that you can converse with, that is as adept as any human at communicating with varied intonation and creative wit. The line quickly blurs.

That said, it might not be necessary, desirable, or even possible for AI to attain or feature any kind of consciousness.

In Life 3.0: Being Human in the Age of Artificial Intelligence, Max Tegmark, professor of physics at MIT and president of the Future of Life Institute, laments, “If you mention the ‘C-word’ to an AI researcher, neuroscientist, or psychologist, they may roll their eyes. If they’re your mentor, they might instead take pity on you and try to talk you out of wasting your time on what they consider a hopeless and unscientific problem.”

It’s a reasonable, if not slightly dismissive, position to take. Why even bother taking the consciousness problem into account? Tech titans like Google and IBM have already made impressive strides in creating self-teaching algorithms that can out-think and out-pace (albeit in narrowly-defined circumstances) any human brain, and deep-learning programs in the field of medicine are also out-performing doctors in some areas of tumor identification and blood work assessment. These technologies, while not perfect, perform well, and they’re only getting better at what they do.

Douglas Hofstadter, the pioneering phenomenologist who wrote the Pulitzer Prize-winning Gödel, Escher, Bach: An Eternal Golden Braid, is among those who think we absolutely need to bother, and for good reason.

In a 2013 interview with The Atlantic, Hofstadter explains his belief that we’re largely missing the point if we don’t take things like the nature of conscious intelligence into account. Referencing Deep Blue, the famous IBM-developed chess program that beat Garry Kasparov in 1997, he says, “Okay, [...] Deep Blue plays very good chess—so what? Does that tell you something about how we play chess? No. Does it tell you about how Kasparov envisions, understands a chessboard?”

Hofstadter’s perspective is critical. If these hyper-capable algorithms aren’t built with a proper understanding of our own minds informing them, an understanding that is still very much inchoate, how could we know if they attain conscious intelligence? More pressingly, without a clear understanding of the phenomenon of consciousness, will charging into the future with this technology create more problems than it solves?

In Artificial Intelligence: A Guide for Thinking Humans, Melanie Mitchell, a former graduate student of Hofstadter, describes the fear of reckless AI development that her mentor once expressed to a room full of Google engineers at a 2014 meeting at the company’s headquarters in Mountain View, California.

“I find it very scary, very troubling, very sad, and I find it terrible, horrifying, bizarre, baffling, bewildering, that people are rushing ahead blindly and deliriously in creating these things.”

That’s a fair number of unsavory adjectives to string together. But when language like that comes from someone the philosopher Daniel Dennett says is better than anybody else at studying the phenomena of the mind, it makes you appreciate the potential gravity of what’s at stake.

Conscious AI: not in our lifetime

While Hofstadter’s worries are perfectly valid on some level, others, like Mitch Kapor, the entrepreneur and co-founder of the Electronic Frontier Foundation and Mozilla, think we shouldn’t work ourselves into a panic just yet. Speaking to Vanity Fair in 2014, Kapor warns, “Human intelligence is a marvelous, subtle, and poorly understood phenomenon. There is no danger of duplicating it anytime soon.”

Tegmark labels those who feel as Kapor does, that AGI is hundreds of years off, “techno-skeptics.” Among the ranks of this group are Rodney Brooks, the former MIT professor and inventor of the Roomba robotic vacuum cleaner, and Andrew Ng, former chief scientist at Baidu, China’s Google, whom Tegmark reports as having said that, “Fearing a rise of killer robots is like worrying about overpopulation on Mars.”

That might sound like hyperbole, but consider the fact that there is no existing software that even comes close to being able to rival the brain in terms of overall computing ability.

Before his death in 2018, Paul Allen, Microsoft co-founder and founder of the Allen Institute for Brain Science, wrote alongside Mark Greaves in the MIT Technology Review that achieving singularity, the point where technology develops beyond the human ability to monitor, predict, or understand it, will take far more than just designing increasingly competent machines:

“To achieve the singularity, it isn’t enough to just run today’s software faster. We would also need to build smarter and more capable software programs. Creating this kind of advanced software requires a prior scientific understanding of the foundations of human cognition, and we are just scraping the surface of this. This prior need to understand the basic science of cognition is where the ‘singularity is near’ arguments fail to persuade us.”

Like-minded individuals such as Naveen Joshi, the founder of Allerin, a company that deals in big data and machine learning, assert that we’re “leaps and bounds” away from achieving AGI. However, as he admits in an article in Forbes, the sheer pace of our development in AI could easily change his mind.

It's on the hor-AI-zon

It’s certainly possible that the scales are tipping in favor of those who believe AGI will be achieved sometime before the century is out. In 2013, Nick Bostrom of Oxford University and Vincent Mueller of the European Society for Cognitive Systems published a survey in Fundamental Issues of Artificial Intelligence that gauged the perception of experts in the AI field regarding the timeframe in which the technology could reach human-like levels.

The report reveals “a view among experts that AI systems will probably (over 50%) reach overall human ability by 2040-50, and very likely (with 90% probability) by 2075.”

Futurist Ray Kurzweil, the computer scientist behind music-synthesizer and text-to-speech technologies, is a believer in the fast approach of the singularity as well. Kurzweil is so confident in the speed of this development that he’s betting hard. Literally, he’s wagering Kapor $10,000 that a machine intelligence will be able to pass the Turing test, a challenge that determines whether a computer can trick a human judge into thinking it is human, by 2029.

Shortly after that, as he says in a recent talk with Society for Science, humanity will merge with the technology it has created, uploading our minds to the cloud. As admirable as that optimism is, this seems unlikely, given our newly-forming understanding of the brain and its relationship to consciousness.

Christof Koch, an early advocate of the push to identify the physical correlates of consciousness, takes a more grounded approach while retaining some of the optimism for human-like AI appearing in the near future. Writing in Scientific American in 2019, he says, “Rapid progress in coming decades will bring about machines with human-level intelligence capable of speech and reasoning, with a myriad of contributions to economics, politics and, inevitably, warcraft.”

Koch is also one of the contributing authors to neuroscientist Giulio Tononi’s integrated information theory (IIT) of consciousness. As Tegmark puts it, the theory argues that, “consciousness is the way information feels when being processed in certain complex ways.” IIT asserts that the consciousness of any system can be assessed by a metric of 𝚽 (or Phi), a mathematical measure detailing how much causal power is inherent in that system.

In his book, The Quest for Consciousness: A Neurobiological Approach, Koch equates phi to the degree to which a system is, “more than the sum of its parts.” He argues that phi can be a property of any entity, biological or non-biological.

Essentially, this measure could be used to denote how aware the inner workings of a system are of the other inner workings of that system. If 𝚽 is 0, then there is no such awareness, and the system feels nothing.

The theory is one of many, to be sure, but it's notable for its attempt at mathematical measurability, helping to make the immaterial feeling of consciousness something tangible. If proven right, it would essentially preclude the possibility of machines being conscious, something that Tononi elaborates on in an interview with the BBC:

“If integrated information theory is correct, computers could behave exactly like you and me – indeed you might [even] be able to have a conversation with them that is as rewarding, or more rewarding, than with you or me – and yet there would literally be nobody there.”

Optimism of a (human) kind

The interweaving of consciousness and AI represents something of a civilizational, high-wire balancing act. There may be no other fields of scientific inquiry in which we are so quickly advancing while having so little idea of what we’re potentially doing.

If we manage, whether by intent or accident, to create machines that experience the world subjectively, the ethical implications would be monumental. It would also be a watershed moment for our species, and we would have to grapple with what it means to have essentially created new life. Whether these remain a distant possibility or await us just around the corner, we would do well to start considering them more seriously.

In any case, it may be useful to think about these issues with less dread and more cautious optimism. This is exactly the tone that Tegmark strikes at the end of his book, in which he offers the following analogy:

“When MIT students come to my office for career advice, I usually start by asking them where they see themselves in a decade. If a student were to reply ‘Perhaps I’ll be in a cancer ward, or in a cemetery after getting hit by a bus,’ I’d give her a hard time [...] Devoting 100% of one’s efforts to avoiding diseases and accidents is a great recipe for hypochondria and paranoia, not happiness.”

Whatever form the mind of AGI takes, it will be influenced by and reflect our own. It seems that now is the perfect time for humanity to prioritize the project of collectively working out just what ethical and moral principles are dear to us. Doing so would not only be instructive in how to treat one another with dignity, it would help ensure that artificial intelligence, when it can, does the same."

Reference: https://interestingengineering.com/will-ai-ever-be-conscious

I have lost a lot of faith in the medical community and the governments over the last several years, but a few good things can still rise above the corruption and the pushing of drugs, such as a new approach to healing people. The following is from www.gaia.com and was written by Hunter Parsons. The approach it describes does not involve any drug or an ineffective so-called vaccine for which the drug company is not held accountable in any way; instead, it uses sound! Sound can regrow bone tissue! Here is the story:

"The future of regenerative medicine could be found within sound healing by regrowing bone cells with sound waves.

The use of sound as a healing modality has an ancient tradition all over the world. The ancient Greeks used sound to cure mental disorders; Australian Aborigines reportedly use the didgeridoo to heal; and Tibetan or Himalayan singing bowls were, and still are, used for spiritual healing ceremonies.

Recently, a study showed an hour-long sound bowl meditation reduced anger, fatigue, anxiety, and ...

I am not a fan of a defense agency studying anti-gravity and other exotic tech, but if the commercial world can make this technology cheap, it will change our world yet again. The following is about a three-minute read from www.gaia.com, written by Hunter Parsons:

"Wormholes, invisibility cloaks, and anti-gravity — it’s not science fiction, it’s just some of the exotic things the U.S. government has been researching.

A massive document dump by the Defense Intelligence Agency shows some of the wild research projects the United States government was, at least, funding through the Advanced Aerospace Threat Identification Program known as AATIP.

And another lesser-known entity called the Advanced Aerospace Weapons System Application Program, or AAWSAP.

The Defense Intelligence Agency has recently released a large number of documents to different news outlets and individuals who have filed Freedom of Information Act requests.

Of particular interest are some 1,600 pages released to Vice News, which ...

As our technology gets better, we are discovering more about the history of mankind and pushing the timeline back further and further. The following article from www.gaia.com, written by Michael Chary, discusses a new find that changes the historical timeline:

"Over the past decade, there have been a number of archeological revelations pushing back the timeline of human evolution and our ancient ancestors’ various diasporas. Initially, these discoveries elicit some resistance as archeologists bemoan the daunting prospect of rewriting the history books, though once enough evidence is presented to established institutions, a new chronology becomes accepted.

But this really only pertains to the era of human development that predates civilization — the epochs of our past in which we were merely hunter-gatherers and nomads roaming the savannahs. Try challenging the consensus timeline of human civilization and it’s likely you’ll be met with derision and rigidity.

Conversely, someone of an alternative...

I am not sure if you have heard of a show on YouTube called "The Why Files." If not, you should check it out; it is interesting and brings some humor to a range of subjects. Last week's episode was about a different theory of how the universe works and how mainstream science is attempting to shut it down, as it always seems to do when an idea goes against some special interest. Today this is akin to what happened to those who questioned the idea that the Earth was the center of the universe, which mainstream so-called science all believed during the Renaissance period. Any theory that the Earth was not the center of the universe was called misinformation. Does this sound familiar today? People laughed at and mocked figures like Leonardo da Vinci, Nicolaus Copernicus, and Georg Purbach as crackpots, conspiracy theorists, and nut-jobs, and some who advanced such ideas were suppressed and even imprisoned for their radical thoughts and observations. Again, it sounds like today in so many ways. In any event, this is a good one to ponder and see even if a bad idea ...

Seemingly chaotic systems like the weather and the financial markets are governed by the laws of chaos theory.

We have all heard about chaos theory, but if you have not, or have forgotten what it is, here is an explanation from interestingengineering.com:

"Chaos theory deals with dynamic systems, which are highly sensitive to initial conditions, making it almost impossible to track the resulting unpredictable behavior. Chaos theory seeks to find patterns in systems that appear random, such as weather, fluid turbulence, and the stock market.

Since the smallest of changes can lead to vastly different outcomes, the long-term behavior of chaotic systems is difficult to predict despite their inherently deterministic nature.

As Edward Lorenz, who first proposed what became commonly known as the Butterfly Effect, eloquently said, 'Chaos: When the present determines the future, but the approximate present does not approximately determine the future.'"
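To make "sensitive to initial conditions" concrete, here is a minimal Python sketch of my own (not from the quoted article) using the logistic map, a standard textbook example of a chaotic system. It assumes the parameter value r = 4.0, where the map is known to behave chaotically, and a starting value of 0.2; both choices are only for illustration. Two runs that start one part in ten billion apart are iterated with the exact same deterministic rule, and the gap between them is tracked.

```python
def logistic_trajectory(x0: float, r: float = 4.0, steps: int = 60) -> list[float]:
    """Iterate the logistic map x -> r * x * (1 - x), starting from x0.

    Returns steps + 1 values: the starting point plus one value per step.
    """
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


def divergence(x0: float, delta: float = 1e-10, r: float = 4.0, steps: int = 60) -> list[float]:
    """Absolute gap, step by step, between two runs whose starts differ by delta."""
    a = logistic_trajectory(x0, r, steps)
    b = logistic_trajectory(x0 + delta, r, steps)
    return [abs(p - q) for p, q in zip(a, b)]


if __name__ == "__main__":
    gaps = divergence(0.2)
    # Print the gap every ten steps to watch the tiny initial difference grow.
    for n in (0, 10, 20, 30, 40, 50, 60):
        print(f"step {n:2d}: gap = {gaps[n]:.3e}")
```

Running it prints gaps that start out microscopic and, because the difference roughly doubles on each step at r = 4.0, typically become as large as the values themselves somewhere around step 30 to 40. The exact numbers depend on floating-point arithmetic, so treat them as illustrative. This is Lorenz's point in miniature: the rule is perfectly deterministic, yet an approximate starting point does not approximately determine where you end up.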

You may have heard chaos theory summed up by the saying that a butterfly flaps its wings in Brazil,...

I, for one, have lost trust in medical doctors because of COVID and because they seem to push pills for everything, along with untested so-called vaccines that use an unproven technology, because the government and the state medical boards told them to. There are very few exceptions. As a result, they do not address the key problem; they just prescribe more and more pills that keep you alive and sick longer so that they and Big Pharma can profit from you. Will AI do any better? That depends on what was used to train the AI. If it also pushes pills and vaccines without question, then you have the same problems noted above. However, if the AI's training includes all possible forms of treatment and it zeroes in on the true underlying problem, then there is a possibility it could be far better than most medical doctors today.

The following is from an article from interestingengineering.com and written by Paul Ratner:

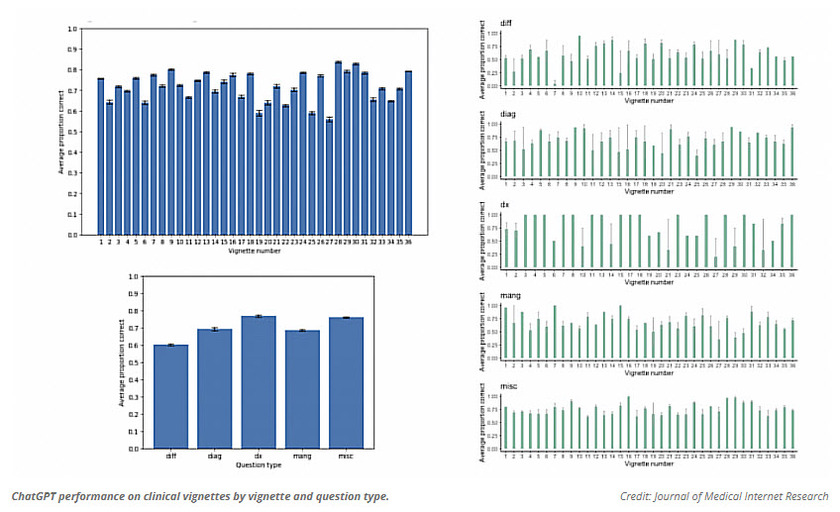

"A new study looks at how accurately AI can diagnose patients. We interview the researcher, who weighs in on AI's role ...